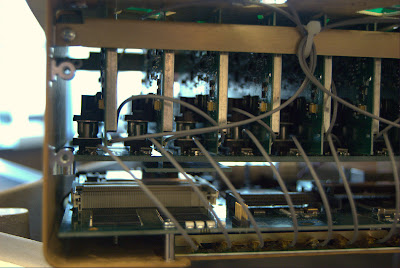

Robotic Arm

This robot had a camera and EM field sensors in its hand, and could detect the presence of an object within grasping distance. It had been trained to recognize some objects; others it had not, but it would attempt to grab them anyway. Voice synthesis provided additional feedback, most humorously when it accidentally (?) dropped something and said 'oops'. Motor feedback also sensed when the arm was pushed, and the arm would give way, making it much safer than an arm that would blindly power through any obstacle.

It's insane, this application level taint

I don't really know what this is about...

Directional phased array wireless networking

The phased array part is pretty cool, but the application wasn't that compelling: using two directional antennas, wireless access can be provided to a select zone where the two beams overlap, while zones not covered by both are excluded. Maybe it's more power efficient that way?

This antenna also had a motorized base, so that comparisons between physically rotating the antenna and rotating the field pattern could be made.
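To make "rotating the field pattern" a little more concrete, here is a minimal sketch of electronic beam steering. It assumes a uniform linear array with half-wavelength spacing, which is a textbook model and not necessarily what the demo used; the function names and parameters (`array_factor`, `peak_direction`, `n_elements`, `spacing_wavelengths`) are made up for illustration.

```python
import cmath
import math


def array_factor(theta_deg, steer_deg, n_elements=8, spacing_wavelengths=0.5):
    """Normalized array-factor magnitude of a uniform linear array.

    theta_deg: direction being evaluated, measured from broadside.
    steer_deg: direction the per-element phase shifts are set to favor.
    Both the geometry and the defaults are assumptions for this sketch.
    """
    k_d = 2 * math.pi * spacing_wavelengths
    # Net phase step between adjacent elements: the geometric path
    # difference toward theta, minus the applied steering phase.
    psi = k_d * (math.sin(math.radians(theta_deg)) - math.sin(math.radians(steer_deg)))
    total = sum(cmath.exp(1j * n * psi) for n in range(n_elements))
    return abs(total) / n_elements


def peak_direction(steer_deg, **kwargs):
    """Scan -90..90 degrees in 1-degree steps and return the strongest direction."""
    return max(range(-90, 91), key=lambda a: array_factor(a, steer_deg, **kwargs))


if __name__ == "__main__":
    for steer in (0, 30, -45):
        print(f"steering phase set for {steer:+d} deg, main lobe at {peak_direction(steer):+d} deg")
```

Changing `steer_deg` moves the main lobe without anything physically moving, which is the electronic counterpart of turning the motorized base.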

Haptic squeeze

This squeeze thing has a motor in it to resist pressure, but was broken at the time I saw it. The presenter said it wasn't really intended to simulate handling real objects in virtual space like other haptic interfaces might, but to be used more abstractly as an interface to anything.

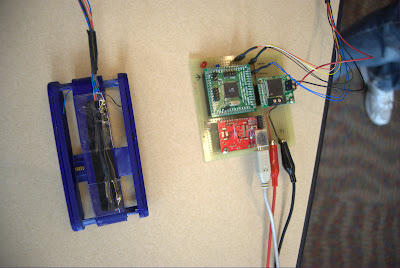

RFID Accelerometer

This was one of two RFID accelerometers. Powered entirely from the RFID antenna, the device sends back accelerometer data to rotate a planet on a computer screen. The range was very limited, and the update rate was about 10 Hz. The second device could be charged within range of the field, taken out of range and moved around, then brought back to the antenna to download a time history of the measurements it had taken (only 2 Hz now). The canonical application is putting the device in a shipped package and then reading what it experienced upon receipt.

Wireless Resonant Energy

This has had plenty of coverage elsewhere but is very cool to see in person. Currently, moving the receiver end more than an inch forward or back, or rotating it, causes the light bulb to dim and go out.

Scratch Interface

A simple interface where a microphone is placed on a surface, and tapping on the surface in distinct ways can be used to control a device. There was also a very simple demo of using OpenCV face tracking to reorient a displayed video, correcting for the distortion seen when viewing a flat screen from an angle.
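For a rough idea of how tapping on a surface might be turned into commands, here is a small sketch. It is not the demo's method: it assumes the microphone signal is already available as a list of amplitude samples, detects taps with a plain threshold plus a short dead time, and maps the tap count to a command. The names `count_taps`, `command_for`, and `COMMANDS`, and the command mapping itself, are hypothetical.

```python
def count_taps(samples, threshold=0.5, refractory=3):
    """Count taps in a list of amplitude samples.

    A tap is any sample whose magnitude reaches `threshold`; the next
    `refractory` samples are skipped so one tap's ringing is not
    counted twice. Both values are illustrative guesses.
    """
    taps = 0
    cooldown = 0
    for sample in samples:
        if cooldown > 0:
            cooldown -= 1
        elif abs(sample) >= threshold:
            taps += 1
            cooldown = refractory
    return taps


# Hypothetical mapping from tap count to a device command.
COMMANDS = {1: "play/pause", 2: "next track"}


def command_for(samples):
    """Return the command for a burst of samples, or "ignore" if unrecognized."""
    return COMMANDS.get(count_taps(samples), "ignore")


if __name__ == "__main__":
    double_tap = [0.0, 0.0, 0.9, 0.6, 0.1, 0.0, 0.0, 0.0, 0.8, 0.2, 0.0]
    print(command_for(double_tap))
```

A real system would presumably need something more robust than a fixed threshold to tell different kinds of taps apart, but the overall shape (detect events, then map patterns to actions) is the same.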

Look around the building

I found myself walking in a circle and not quite intuitively feeling that I had completed a circuit when I actually had.

Also see another article about this, Intel's Flickr photos, and a video.