http://getsatisfaction.com/livelabs/topics/pointcloud_exporter

What to do

Get Wireshark http://www.wireshark.org/

Allow it to install the special software to intercept packets.

Start Wireshark. Put

http.request

into the filter field.

Stop any unnecessary network activity, such as playing YouTube videos. It dumps a lot of data into Wireshark, which makes finding the bin files harder.

*** Update ***

Some users have found the bin files stored locally in %temp%/photosynther, which makes finding them much easier than using Wireshark. For me on Vista, the directory exists for one user but not for others, though the bin files have to be stored locally somewhere, right?

***
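If the local cache exists on your machine, a few lines of Python can list the point files for you. This is only a sketch: it assumes the cache really is a photosynther folder under the system temp directory (as reported above) and that the files follow the points_*.bin naming seen in the Wireshark capture. The function name find_local_bins is made up for illustration.

```python
import os
import tempfile


def find_local_bins(cache_dir=None):
    """Return sorted paths of points_*.bin files found under cache_dir."""
    if cache_dir is None:
        # Assumption: the viewer caches files in <temp>/photosynther.
        cache_dir = os.path.join(tempfile.gettempdir(), "photosynther")
    matches = []
    # os.walk yields nothing if the directory does not exist.
    for root, _dirs, files in os.walk(cache_dir):
        for name in files:
            if name.startswith("points_") and name.endswith(".bin"):
                matches.append(os.path.join(root, name))
    return sorted(matches)


# Usage: print every cached point file, one per line.
# for path in find_local_bins():
#     print(path)
```

An empty result just means the directory is missing or holds no point files for the current user, in which case the Wireshark route below still applies.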

Open the photosynth site in a browser. Find a synth with a good point cloud, it will probably be one with several hundred photos and a synthiness of > 70%. There are some synths that are 100% synthy but have point clouds that are flat billboards rather than cool 3D features- you don't want those. Press p or hold ctrl to see the underlying point cloud.

*** Update ***

Use the direct 3d viewer option to view the synth, otherwise you won't get the synth files. (thanks losap)

***

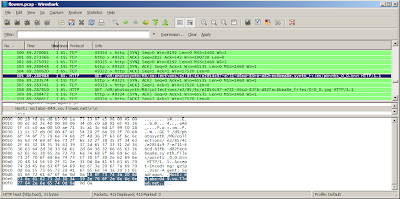

Start a capture in Wireshark: click the upper-left button, then click the proper interface (experiment if necessary).

Hit reload on the browser window showing the synth. Wireshark should then start showing which files are being sent to your computer. Stop the capture once the browser has finished reloading. There may be a couple of screenfuls, but near the bottom there should be a few listings of bin files.

Select one of the lines that shows a bin file request, right-click it, and choose Copy | Summary (text). Paste that into the address field of a new browser window. Delete the parts before and after /d8/348345348.../points_0_0.bin. Look back in Wireshark to find the http address that goes in front of that path; it should be http://mslabs-nnn.vo.llnwd.net, where nnn is some three-digit number. TBD: is there a way to cut and paste the fully formed URL less manually?
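One way to make the cut and paste less manual is to let a small script pull the path out of the copied summary and glue it onto the host. The sketch below assumes the summary line contains the request as "GET /some/path.bin HTTP/1.1"; the exact column layout of Wireshark's summary text is not something I have pinned down, so the regular expression only looks for that part. The function name url_from_summary and the example values are hypothetical.

```python
import re


def url_from_summary(summary, host):
    """Build a full URL from a copied request summary and a host name."""
    # Assumption: the summary includes "GET <path ending in .bin>".
    match = re.search(r"GET\s+(\S+\.bin)", summary)
    if match is None:
        raise ValueError("no GET request for a .bin file found in summary")
    return "http://" + host + match.group(1)


# Usage with made-up values (substitute the host and line you actually see):
# line = "42 1.234 192.168.1.5 10.0.0.1 HTTP GET /d8/example/points_0_0.bin HTTP/1.1"
# print(url_from_summary(line, "mslabs-nnn.vo.llnwd.net"))
```

You still have to read the host name off the capture yourself, since it is passed in rather than guessed.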

If the address is correct, hit return to make the browser load the file. A dialog will pop up; save the file to disk. If there are many points bin files, increment the second 0 in the file name (points_0_0.bin, points_0_1.bin, and so on) and get them all. If you have Cygwin, a bash script works well:

    for i in $(seq 0 23)
    do
        wget "http://someurl/points_0_$i.bin"
    done
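If you don't have Cygwin or wget, the same loop can be sketched in Python. This assumes a current Python 3 (urllib.request) rather than the 2.5 series mentioned below, keeps http://someurl as the same placeholder used in the bash loop, and uses made-up function names.

```python
import os
import urllib.request


def point_urls(base_url, count):
    """Return the URLs points_0_0.bin .. points_0_<count-1>.bin under base_url."""
    base = base_url.rstrip("/")
    return ["%s/points_0_%d.bin" % (base, i) for i in range(count)]


def download_all(base_url, count, dest_dir="."):
    """Fetch each point file and save it under its own name in dest_dir."""
    for url in point_urls(base_url, count):
        target = os.path.join(dest_dir, url.rsplit("/", 1)[1])
        urllib.request.urlretrieve(url, target)
        print("saved", target)


# Usage: 24 files, matching `seq 0 23` in the bash loop.
# download_all("http://someurl", 24)
```

The count is the number of files, so 24 here covers indices 0 through 23.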

Python

Install Python. If you have Cygwin, install the Cygwin Python with setup.exe; otherwise go to http://www.python.org/download/ and download the Windows installer version.

*** UPDATE *** It appears the 2.5.2 Windows Python doesn't work correctly with the script, which I'll look into. For now the best solution is to use Linux or Cygwin, with the Python that either one can install. ***

Currently the script http://binarymillenium.googlecode.com/svn/trunk/processing/psynth/bin_to_csv.py works like this from the command line:

python bin_to_csv.py somefile.bin > output.csv

I have only used the '>' redirection under Cygwin. The Windows command prompt supports '>' as well, so it should work there too, but I haven't confirmed it. I'll update the script to optionally take a second argument that is the output file.

If there are multiple points bin files, it's easy to write another bash loop that processes them all in a row. Otherwise, run the command above by hand once per file to create n different csvs for n bin files, then cut and paste the contents of each into one complete csv file.
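The cut-and-paste step can also be scripted. Here is a minimal sketch that concatenates the per-file csvs into one, assuming each csv is plain text with one point per line and no header row (which is what the sample output below looks like). The function name merge_csvs and the file names in the usage comment are made up.

```python
def merge_csvs(input_paths, output_path):
    """Concatenate csv files in the given order; return the number of lines written."""
    written = 0
    with open(output_path, "w") as out:
        for path in input_paths:
            with open(path) as src:
                for line in src:
                    line = line.strip()
                    if line:  # skip blank lines between points
                        out.write(line + "\n")
                        written += 1
    return written


# Usage with hypothetical file names:
# merge_csvs(["points_0_%d.csv" % i for i in range(24)], "all_points.csv")
```

The order of the inputs does not matter for a point cloud, but keeping it stable makes the result reproducible.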

The output will be a file with a long listing of numbers, where each line looks like this:

-4.17390823, -1.38746762, 0.832364499, 24, 21, 16

-4.07660007, -1.83771312, 1.971277475, 17, 14, 9

-4.13320493, -2.56310105, 2.301105737, 10, 6, 0

-2.97198987, -1.44950056, 0.194522276, 15, 12, 8

-2.96658635, -1.45545017, 0.181564241, 15, 13, 10

-4.20609378, -2.08472299, 1.701148629, 25, 22, 18

The first three numbers are the xyz coordinates of a point, and the last three are the red, green, and blue components of the color. To get a conventional 0-255 number for each color channel, red and blue have to be multiplied by 8, and green by 4. The Python script could easily be changed to do that, or even to convert the color channels to 0.0-1.0 floating point numbers.
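The scaling can be sketched in a couple of small functions. The multipliers 8 and 4 come straight from the paragraph above; the maximum raw values of 31 for red and blue and 63 for green are my inference from those multipliers (5-bit and 6-bit channels), not something the script documents. The function names are made up.

```python
def to_8bit(r, g, b):
    """Scale raw channel values to the conventional 0-255 range."""
    return r * 8, g * 4, b * 8


def to_unit_float(r, g, b):
    """Scale raw channel values to 0.0-1.0 floats.

    Assumption: red and blue top out at 31 and green at 63.
    """
    return r / 31.0, g / 63.0, b / 31.0


# Usage on the first sample point above (raw color 24, 21, 16):
# print(to_8bit(24, 21, 16))
```

Note that plain multiplication tops out at 248 for red and blue and 252 for green, so pure white comes out very slightly dark; dividing by the assumed maximum instead avoids that.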

Point Clouds - What Next?

The processing files here can use the point clouds:

http://binarymillenium.googlecode.com/svn/trunk/processing/psynth/

Programs like MeshLab can also use them with some modification. I haven't experimented with it much, but I'll look into that and make a post about it.
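As one possible form of that modification, the csv could be rewritten as an ASCII PLY file, a format MeshLab can open. This is an untested-against-MeshLab sketch, not the approach the processing files use: it assumes the six-column csv layout shown above, applies the 8/4/8 color scaling, and uses a made-up function name.

```python
def csv_to_ply(csv_path, ply_path):
    """Write the points in csv_path as an ASCII PLY file; return the point count."""
    points = []
    with open(csv_path) as src:
        for line in src:
            fields = [f.strip() for f in line.split(",")]
            if len(fields) != 6:
                continue  # skip blank or malformed lines
            x, y, z = (float(v) for v in fields[:3])
            r, g, b = (int(v) for v in fields[3:])
            # Scale raw colors to 0-255 (red and blue by 8, green by 4).
            points.append((x, y, z, r * 8, g * 4, b * 8))
    with open(ply_path, "w") as out:
        out.write("ply\nformat ascii 1.0\n")
        out.write("element vertex %d\n" % len(points))
        for name in ("x", "y", "z"):
            out.write("property float %s\n" % name)
        for name in ("red", "green", "blue"):
            out.write("property uchar %s\n" % name)
        out.write("end_header\n")
        for point in points:
            out.write("%s %s %s %d %d %d\n" % point)
    return len(points)


# Usage with hypothetical file names:
# csv_to_ply("all_points.csv", "all_points.ply")
```

The file holds vertices only, with no faces, so it should load as a colored point cloud rather than a surface.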

animated gif of height map
